Stable Diffusion online AI

Artificial Intelligence Accessible to Everyone for free

Free Image Generation tools online

Free Artificial Intelligence and LLM toolbox:

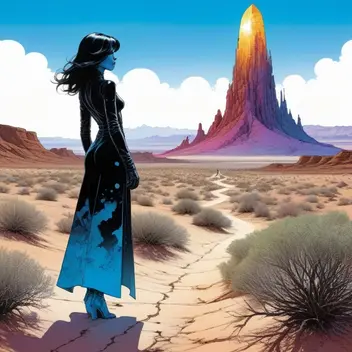

Artwork from Community

(Image: poster with the face of a robot, featuring a 100000 Buzz reward, issued by Civitai County)

What is Stable Diffusion?

Stable Diffusion is a text-to-image Artificial Intelligence. It generates images from a simple description in natural language.

Developed by Stability AI, it is open source. Unlike DALL-E or Midjourney, which are only available as online services, it can run on consumer GPUs. The Stable Diffusion community is very active, and many fine-tuned models and add-ons are available. You can even train a model on your own data!

Stable Diffusion was trained on images from the LAION dataset, including part of the LAION-Aesthetics subset, to generate images that match text prompts. Besides original digital art, it can also be used for animation and image manipulation, such as inpainting or transforming an existing image.

You can see examples in our prompt gallery, art gallery and image browser.

Frequently Asked Questions

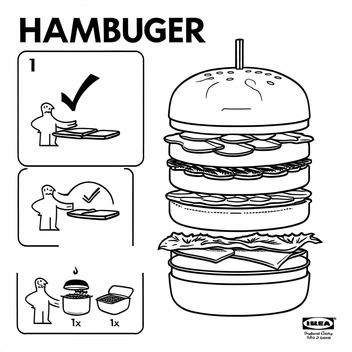

What is a prompt?

A prompt is a short text, usually up to 75 tokens (roughly 350 characters), that describes the image you want to generate.
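To get a feel for the 75-token budget, the sketch below splits a prompt into 75-token chunks. It is only an approximation: `rough_tokenize` is a hypothetical stand-in that counts words and punctuation marks, whereas Stable Diffusion's CLIP text encoder uses a byte-pair tokenizer that often splits uncommon words into several tokens, so real counts tend to be higher. Chunking mirrors what the Automatic1111 webUI does with long prompts; other tools may simply truncate everything past the limit.

```python
import re

# Usable prompt tokens per chunk (CLIP's 77-token window minus start/end markers).
CHUNK_SIZE = 75


def rough_tokenize(prompt: str) -> list[str]:
    """Hypothetical stand-in for CLIP's tokenizer: words and punctuation marks.

    Real CLIP counts are usually higher, because uncommon words are split
    into several sub-word tokens.
    """
    return re.findall(r"\w+|[^\w\s]", prompt.lower())


def split_into_chunks(prompt: str, size: int = CHUNK_SIZE) -> list[list[str]]:
    """Split a prompt into consecutive chunks of at most `size` tokens."""
    tokens = rough_tokenize(prompt)
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


prompt = "a cozy cabin in a snowy forest, warm light, highly detailed, 35mm photo"
chunks = split_into_chunks(prompt)
print(f"{len(rough_tokenize(prompt))} rough tokens in {len(chunks)} chunk(s)")
```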

A negative prompt is a simple way to specify what should not appear in the generated image.
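Under the hood, most Stable Diffusion tools apply the negative prompt through classifier-free guidance: at each denoising step the model predicts noise once for the prompt and once for the negative prompt (an empty prompt when none is given), and the final prediction is pushed away from the negative one. The sketch below shows only that combination step on toy numbers; the function and variable names are illustrative, and `cfg_scale` corresponds to the "CFG scale" or "guidance scale" setting found in most interfaces.

```python
def guided_noise(noise_positive: list[float],
                 noise_negative: list[float],
                 cfg_scale: float = 7.0) -> list[float]:
    """Combine two noise predictions with classifier-free guidance.

    noise_positive: prediction conditioned on the prompt.
    noise_negative: prediction conditioned on the negative prompt
                    (or on an empty prompt when no negative prompt is set).
    The result starts from the negative prediction and moves cfg_scale times
    the difference toward the positive one, i.e. away from the negative prompt.
    """
    return [neg + cfg_scale * (pos - neg)
            for pos, neg in zip(noise_positive, noise_negative)]


# Toy 3-value predictions (made-up numbers, not real model output).
positive = [0.5, -0.2, 0.1]
negative = [0.3, -0.1, 0.1]
print(guided_noise(positive, negative))
```

With `cfg_scale=1.0` the negative prediction cancels out and the result equals the positive prediction; larger values follow the prompt more strictly.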

What does seed mean?

The seed is a number that initializes the random noise from which the image is generated. You don't have to choose it yourself: if you don't set one (usually by entering -1), a random seed is used.

But if you fix the seed, you can reproduce the same image, then try different settings or tweak the prompt and see exactly what each change does.
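The sketch below illustrates why a fixed seed is reproducible: the seed initializes a random number generator, and the same generator state always yields the same starting noise. It uses Python's `random` module and a short list of numbers as a stand-in for the latent noise tensor; real tools draw that noise with their own framework's generator (for example PyTorch), and results can still vary slightly across hardware or software versions. `resolve_seed` and `initial_noise` are hypothetical helper names, and treating -1 as "random" follows the convention described above.

```python
import random


def resolve_seed(seed: int = -1) -> int:
    """Return the seed to use; -1 means "pick one at random"."""
    if seed == -1:
        return random.randint(0, 2**32 - 1)
    return seed


def initial_noise(seed: int, size: int = 4) -> list[float]:
    """Toy stand-in for the starting latent noise: Gaussian values from a seeded generator."""
    rng = random.Random(seed)  # private generator, so other code cannot disturb it
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


fixed = resolve_seed(1234)
print(initial_noise(fixed) == initial_noise(fixed))  # same seed, same noise: True
print(resolve_seed(-1))  # a fresh random seed each run
```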

What is LoRA?

LoRA stands for Low-Rank Adaptation. A LoRA is a small add-on file that adjusts a base model without retraining it.

You can use it to adapt Stable Diffusion to a certain style or subject. You can combine several LoRAs in one prompt, each with its own weight, which makes it easy to mix styles and subjects.
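In the original LoRA method, a LoRA stores two small matrices, B (d × r) and A (r × k), whose product B·A is a low-rank update added to a base weight matrix W of size d × k. Mixing several LoRAs with different weights then amounts to W' = W + w₁·B₁A₁ + w₂·B₂A₂ + …. The sketch below applies that formula to plain Python lists; the matrices and weights are made-up values, and real implementations work on GPU tensors and often apply an extra alpha/rank scaling factor that is left out here.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b (rows of a times columns of b)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_loras(base: Matrix, loras: list[tuple[Matrix, Matrix, float]]) -> Matrix:
    """Return base + sum(weight * (B @ A)) over all (B, A, weight) entries."""
    result = [row[:] for row in base]  # copy, so the base weights stay untouched
    for b, a, weight in loras:
        delta = matmul(b, a)  # (d x r) @ (r x k) -> d x k low-rank update
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                result[i][j] += weight * value
    return result


# 3x3 base weight and two rank-1 LoRAs (made-up values): B is 3x1, A is 1x3.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
style_lora = ([[1.0], [0.0], [0.0]], [[0.0, 1.0, 0.0]], 0.8)
subject_lora = ([[0.0], [0.0], [1.0]], [[1.0, 0.0, 0.0]], 0.5)
print(apply_loras(W, [style_lora, subject_lora]))
```

Because each update is only r × (d + k) numbers instead of d × k, LoRA files stay small, and changing a weight simply scales how strongly that LoRA shifts the base model.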

Can I install Stable Diffusion locally?

Yes! You need a GPU with at least 6 GB of video memory (VRAM).

You may use customized models trained by the community, fine-tune results with LoRA and much more.

How to install Stable Diffusion locally?

First, download the SDXL base model and refiner from Stability AI. Next, make sure Python 3.10 and Git are installed.
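Before installing, a short script like the one below can confirm both prerequisites. It is a minimal sketch using only the standard library: it checks that the running interpreter is Python 3.10 and that `git` can be found on the PATH. Run it with the same `python` command the webUI will use, since several Python versions can be installed side by side. `check_prerequisites` is a hypothetical helper name.

```python
import shutil
import sys


def check_prerequisites() -> list[str]:
    """Return the problems found; an empty list means both checks passed."""
    problems = []
    version = sys.version_info
    if (version.major, version.minor) != (3, 10):
        problems.append(f"Python 3.10 expected, found {version.major}.{version.minor}")
    if shutil.which("git") is None:
        problems.append("git was not found on the PATH")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    print("\n".join(issues) if issues else "Python 3.10 and Git found.")
```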

Then, download and set up the webUI from Automatic1111.

Put the base and refiner model files in the models/Stable-diffusion folder inside the webUI directory.
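To double-check the folder layout, the sketch below lists the checkpoint files the webUI should pick up from models/Stable-diffusion. It assumes checkpoints end in `.safetensors` or `.ckpt`, and `list_checkpoints` is a hypothetical helper; the demo builds a throwaway folder with example SDXL file names instead of touching a real install.

```python
import tempfile
from pathlib import Path

# Assumption: checkpoints are distributed as .safetensors or .ckpt files.
CHECKPOINT_SUFFIXES = {".safetensors", ".ckpt"}


def list_checkpoints(webui_dir: str) -> list[str]:
    """List checkpoint files found in <webui_dir>/models/Stable-diffusion."""
    model_dir = Path(webui_dir) / "models" / "Stable-diffusion"
    if not model_dir.is_dir():
        raise FileNotFoundError(f"{model_dir} does not exist; check the webUI path")
    return sorted(p.name for p in model_dir.iterdir()
                  if p.is_file() and p.suffix.lower() in CHECKPOINT_SUFFIXES)


# Demo on a throwaway folder laid out like a webUI install (example file names).
with tempfile.TemporaryDirectory() as root:
    models = Path(root) / "models" / "Stable-diffusion"
    models.mkdir(parents=True)
    for name in ("sd_xl_base_1.0.safetensors", "sd_xl_refiner_1.0.safetensors", "readme.txt"):
        (models / name).touch()
    found = list_checkpoints(root)
print(found)
```

On a real install, call `list_checkpoints` with the path of your own webUI folder; an empty list means the models are not where the webUI looks for them.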

Find webui.bat in the main webUI folder and double-click it (on Linux or macOS, run webui.sh instead). The first launch installs the remaining dependencies, so wait for it to finish.

Finally, open your web browser and go to http://127.0.0.1:7860 (localhost, port 7860) to use Stable Diffusion.
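If the page does not load, the sketch below checks whether anything is answering at the webUI's address. It assumes the Automatic1111 default of http://127.0.0.1:7860 (the port can be changed with the `--port` launch option) and uses only the standard library; `webui_is_up` is a hypothetical helper name.

```python
import urllib.error
import urllib.request

# Assumption: the Automatic1111 default address; change it if you used --port.
WEBUI_URL = "http://127.0.0.1:7860"


def webui_is_up(url: str = WEBUI_URL, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at `url`."""
    # Bypass any configured proxy: the webUI runs on this machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server replied, even if with an error status
    except OSError:
        return False  # connection refused, timed out, or host unreachable


if __name__ == "__main__":
    print("webUI reachable:", webui_is_up())
```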

What is the newest version of Stable Diffusion?

On October 23, 2024, Stability AI released Stable Diffusion 3.5. It is their most capable text-to-image model, with significant improvements in spelling (text rendering), performance and image quality.